Introduction: From Access to Authority

For most of the modern internet era, the idea of the digital divide was simple and tangible. It was about access. Who had the internet and who did not. Who owned a computer, who could afford a smartphone, who lived in a connected city and who lived in a disconnected rural area. Access was visible, measurable, and easy to debate. If someone lacked technology, the solution seemed obvious: provide infrastructure, lower costs, expand coverage.

Over time, that gap began to narrow. Smartphones became cheaper. Mobile internet spread rapidly. Digital services became central to education, banking, communication, and work. In many parts of the world, being offline is now the exception rather than the rule. From the outside, it looks like the digital divide is shrinking.

But it is not disappearing. It is mutating.

The next digital divide will not be defined by who can get online. It will be defined by who controls the systems that shape digital life—and who merely lives inside them.

Access Is No Longer the Real Advantage

Today, two people can use the same phone, the same apps, and the same platforms, yet experience completely different levels of power and autonomy. Both may have internet access. Both may rely on AI-powered services. Both may be deeply embedded in digital ecosystems. But only one may understand how decisions are made, how data is used, and how outcomes can be challenged.

Access, once the primary marker of inequality, has become the baseline. Control has replaced it as the real advantage.

Modern technology does not simply respond to users. It anticipates them. It filters information. It prioritizes certain options and hides others. It nudges behavior subtly, persistently, and at massive scale. These systems operate quietly, often invisibly, and most users never question them because the experience feels smooth and convenient.

This is where the new divide begins.

From Tools We Use to Systems That Shape Us

Earlier generations of technology behaved like tools. You picked them up, used them for a task, and put them down. The power dynamic was clear: humans gave instructions and machines followed.

Today’s AI-driven systems reverse that relationship in subtle ways. Instead of waiting for commands, they observe patterns. Instead of reacting, they predict. Instead of offering neutral tools, they present curated realities.

Your news feed does not simply display information. It decides what matters. Your navigation app does not just show routes. It chooses the fastest path and quietly discourages alternatives. Your streaming platform does not wait for your preferences. It learns them, reinforces them, and reshapes them over time.

These systems are no longer passive. They are active participants in decision-making, and that shifts control away from users who do not understand how those decisions are formed.

What “Control” Actually Means in the Digital Age

Control does not always look like dominance or ownership. In the AI era, it often looks like influence without visibility.

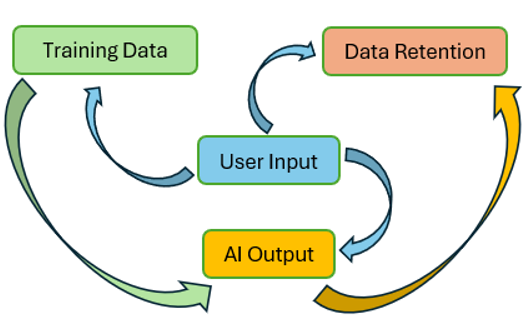

Control means having the ability to question outcomes. It means understanding why a recommendation appeared, why a decision was made, or why an option was removed. It means being able to change defaults, override automation, or opt out without penalty. It also means knowing where your data goes, how long it is kept, and how it is used to shape future decisions. As MIT Technology Review has reported, AI systems are increasingly shaping decisions in ways that are difficult for users to see or challenge.

For most users, these layers are hidden. Interfaces are designed to be friendly, not transparent. Automation is framed as help, not authority. Over time, people stop asking questions because the system “just works.”

That is when control quietly disappears.

Algorithms as Invisible Authorities

Algorithms increasingly function as silent gatekeepers. They influence who gets hired, who gets credit, which content spreads, which voices are amplified, and which are ignored. They rank, score, predict, and filter at a scale no human institution ever could.

Yet these systems rarely explain themselves.

When a loan application is rejected, a job application filtered out, or content suppressed, users often receive no meaningful justification. The logic lives inside models that are too complex, too proprietary, or too opaque to challenge. According to the Stanford AI Index Report, AI adoption is accelerating across society, often without corresponding increases in transparency or user control.

This creates a power imbalance. Decisions that once involved human judgment and accountability are now mediated by systems that feel objective but are far from neutral.

Those who design, train, and tune these systems hold control. Those who are affected by them often have none.

The New Divide: Operators and Subjects

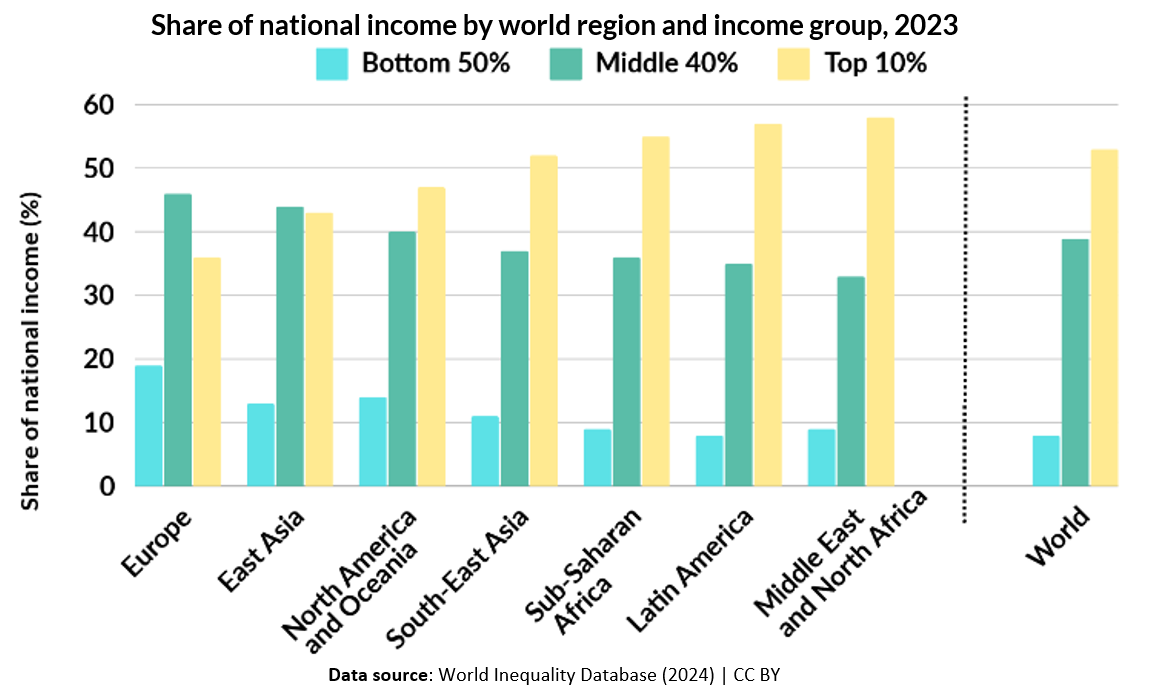

The emerging digital divide separates society into two broad groups.

On one side are the operators: companies, institutions, engineers, and policymakers who design systems, set parameters, interpret outputs, and decide how automation is deployed. They may not control everything, but they understand enough to influence outcomes.

On the other side are the subjects: users whose lives are shaped by automated systems they do not fully understand. They generate data, follow recommendations, and adapt to decisions made elsewhere.

Importantly, this divide is not strictly about wealth or education. A highly educated professional can still be a subject if they lack insight into how digital systems shape their environment. Conversely, a smaller organization with technical literacy can exert outsized control.

The gap is cognitive and structural, not just economic.

Convenience: The Fastest Way to Lose Control

Convenience is the most underestimated force in modern technology. Every time a system removes friction, it also removes awareness.

Autoplay eliminates the decision to continue watching. Smart replies remove the effort of composing a message. Default settings quietly guide behavior without explicit consent. Over time, users trade agency for ease, often without realizing it.

AI thrives in these conditions. The less users intervene, the more systems learn. The more systems learn, the more confident their predictions become. Eventually, behavior feels guided rather than chosen.

Convenience does not feel like coercion. That is why it is so powerful.

Why Digital Literacy Is No Longer Enough

For years, digital literacy focused on basic competence: using software, navigating the web, avoiding obvious scams. That framework is outdated.

The next digital divide depends on AI literacy, which includes understanding how recommendations are formed, recognizing automated decision-making, and knowing when human judgment has been replaced by algorithms.

AI literacy is not about learning to code. It is about knowing when a system is influencing choices and what options exist to challenge or escape that influence.

Without this understanding, access to technology becomes superficial. People can use tools without ever shaping them.

Data Ownership Is the Core of Control

In the digital economy, data is power. It fuels AI systems, refines predictions, and enables personalization at scale. But while users generate enormous amounts of data, they rarely control it.

They do not decide how long it is stored, how it is combined with other datasets, or how it influences decisions made about them. Terms of service obscure these realities behind legal language few people read or understand.

Those who control data pipelines control the future behavior of AI systems. This concentration of power defines the next digital divide more clearly than access ever did.

Connectivity gave people a voice. Data ownership determines whether that voice has weight.

AI Amplifies Existing Power Structures

AI does not emerge in isolation. It reflects the incentives, values, and structures of the institutions that build it.

Organizations with capital, infrastructure, and influence can train better models, deploy them faster, and shape markets more effectively. Smaller players, individuals, and marginalized communities often adapt to systems they did not help create.

Without intentional design choices, AI tends to reinforce existing inequalities rather than dismantle them. Automation scales whatever biases, assumptions, and priorities are embedded within it.

This is not an abstract concern. It is already visible in hiring systems, content moderation, financial scoring, and predictive policing tools.

Why This Divide Is Harder to See

The access divide was visible. You could count connections, devices, and coverage maps. The control divide is subtle.

It hides behind:

- Friendly interfaces
- Personalized experiences
- Helpful suggestions
- Claims of objectivity

People do not feel excluded. They feel assisted. And when systems appear helpful, questioning them feels unnecessary or even ungrateful.

This invisibility makes the new divide harder to debate and harder to correct.

Why Regulation Alone Cannot Solve the Problem

Governments are beginning to respond with AI regulations, data protection laws, and transparency requirements. These efforts matter, but they move slowly compared to technological change.

True control cannot come solely from policy. It must be built into systems themselves through explainability, user agency, meaningful consent, and the ability to override automated decisions.

Without these design principles, regulation becomes reactive rather than protective, addressing harm only after it has already scaled.

The Risk of a Passive Digital Society

A society that does not understand how decisions are made cannot meaningfully challenge them. When automation becomes invisible, accountability weakens. Errors scale faster. Trust erodes quietly.

The greatest risk is not malicious AI. It is unexamined AI—systems that shape lives without scrutiny because they are too convenient, too complex, or too normalized to question.

That is the true danger of the next digital divide.

What a Control-Oriented Digital Future Could Look Like

A healthier digital future does not reject AI. It demands balance.

Control means transparency without overwhelming complexity. It means users know when automation is involved and have real choices. It means data can move with people rather than trapping them inside platforms.

Most importantly, it means education evolves beyond usage toward understanding.

Control is not about doing everything manually. It is about knowing when machines decide—and why.

Why This Moment Matters Now

The systems being built today will define the next decade. Once AI infrastructure becomes embedded, it is difficult to reverse. Defaults harden. Habits form. Power structures solidify.

This is the moment when awareness matters most—before control disappears entirely behind convenience.

Conclusion: The Divide That Will Define the Future

The last digital divide asked who could get online. The next one asks who gets a say.

Everyone may have access. Everyone may use AI. Everyone may live inside digital systems.

But only some will understand them. Only some will influence them. Only some will control them.

That difference—not connectivity—will define inequality in the digital age.