Introduction: A Quiet but Major Shift in AI Strategy

For years, cloud computing has been the backbone of artificial intelligence. From voice assistants and photo recognition to large language models, most AI workloads have been processed on powerful remote servers. Devices like smartphones, laptops, and wearables acted mainly as gateways, sending data to the cloud and waiting for responses.

But that model is changing.

Big tech companies such as Apple, Google, Meta, Microsoft, and Qualcomm are now aggressively investing in on-device AI, where artificial intelligence runs directly on your phone, laptop, or wearable—without relying heavily on cloud servers.

This shift isn’t about replacing the cloud entirely. It’s about rebalancing where intelligence lives, and the reasons behind it reveal where consumer technology is heading next.

What Is On-Device AI?

On-device AI (also called edge AI) refers to artificial intelligence models that run locally on hardware such as:

- Smartphones
- Laptops and tablets
- Smart glasses and wearables
- IoT devices

Instead of sending raw data to cloud servers, the device itself performs tasks like:

- Voice recognition
- Image and video processing
- Language translation
- Personalization and recommendations
- Context-aware automation

This is made possible by specialized chips like NPUs (Neural Processing Units), AI accelerators, and increasingly powerful mobile processors.

Why the Cloud-First AI Model Is Reaching Its Limits

Cloud-based AI enabled rapid progress, but it also introduced problems that are becoming harder to ignore.

Latency and Speed Issues

Cloud AI depends on:

- Internet connectivity
- Network quality
- Server response times

Even small delays matter when AI is meant to feel instant—especially for voice commands, real-time translation, or augmented reality.

On-device AI removes the network round trip, so response time depends only on how quickly the device itself can run the model.
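The latency argument comes down to simple addition. The sketch below uses made-up timings, not measurements, to show its shape: a cloud request pays for the network round trip and any server queueing on top of inference, while a local request pays only for inference, even when the device's chip runs the model more slowly than a data-center server would. All names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CloudTimings:
    """Hypothetical per-request timings for a cloud AI call, in milliseconds."""
    network_round_trip_ms: float  # uploading the request and downloading the response
    server_queue_ms: float        # waiting for a free server
    server_inference_ms: float    # running the model on server hardware


def cloud_latency_ms(t: CloudTimings) -> float:
    # The user waits for every stage of the remote request.
    return t.network_round_trip_ms + t.server_queue_ms + t.server_inference_ms


def on_device_latency_ms(local_inference_ms: float) -> float:
    # No network and no shared server queue: only local compute time remains.
    return local_inference_ms


if __name__ == "__main__":
    # Illustrative numbers only: a slower local chip can still win overall.
    cloud = CloudTimings(network_round_trip_ms=120.0, server_queue_ms=30.0, server_inference_ms=40.0)
    print(f"cloud: {cloud_latency_ms(cloud):.0f} ms")          # cloud: 190 ms
    print(f"on-device: {on_device_latency_ms(80.0):.0f} ms")   # on-device: 80 ms
```

On a weak mobile connection the round-trip term can grow large or fail outright, while the on-device figure stays the same, which is why voice commands, live translation, and AR benefit most.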

Rising Costs for Companies

Running AI models in the cloud is expensive. Costs include:

- Server infrastructure
- Energy consumption
- Bandwidth
- Scaling for millions of users

As AI usage explodes, these costs grow rapidly. On-device processing shifts much of that expense away from data centers.

Google has highlighted the growing importance of on-device AI for faster performance and improved privacy.

Privacy Concerns

Consumers and regulators are increasingly wary of:

- Personal data being uploaded to servers
- Audio, images, and messages being analyzed remotely
- AI systems storing sensitive information

On-device AI allows data to stay local, reducing privacy risks and regulatory pressure.

Privacy Is the Biggest Driver of On-Device AI

Privacy has become a competitive advantage.

Local Processing, Local Control

With on-device AI:

- Voice commands don’t need to leave the device
- Photos are analyzed locally
- Personal habits stay private
- Sensitive data isn’t stored remotely

This approach aligns with stricter data protection laws and growing consumer awareness.

Apple’s Privacy-First Strategy

Apple has been one of the strongest advocates for on-device AI, emphasizing:

- Face recognition processed locally
- On-device photo classification
- Offline voice commands
- Minimal cloud dependency

Other companies are now following the same path.

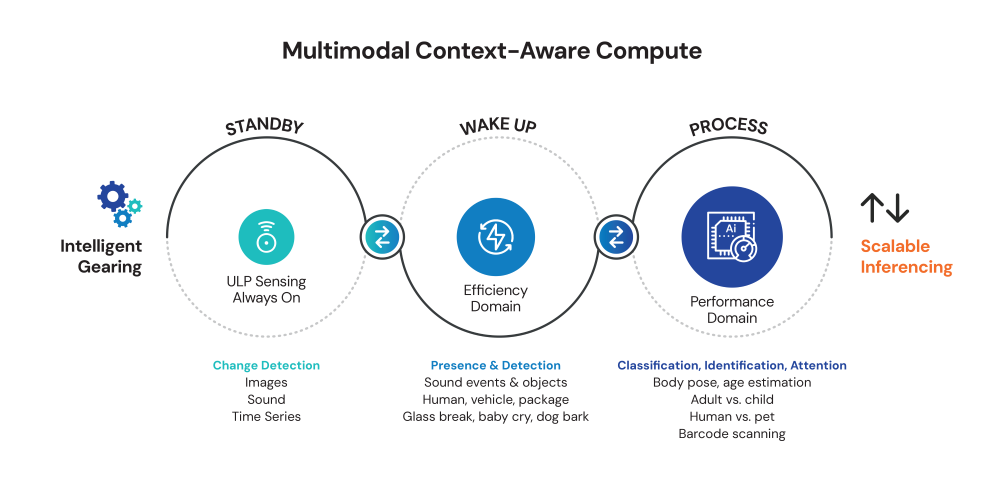

Speed and Reliability Matter More Than Ever

AI features increasingly need to work:

- Instantly
- Offline
- In real time

Where On-Device AI Excels

- Voice typing without lag
- Camera enhancements while recording
- Real-time translation
- Augmented reality overlays
- Smart notifications and automation

Cloud AI struggles in these scenarios due to latency and connectivity limitations.

According to MIT Technology Review, on-device AI is becoming essential as privacy concerns and latency issues grow.

AI Chips Are Getting Extremely Powerful

The rise of on-device AI wouldn’t be possible without massive advances in hardware.

Specialized AI Hardware

Modern devices now include:

- Neural Processing Units (NPUs)
- AI accelerators
- Dedicated machine learning cores

These components are designed specifically for AI workloads, offering:

- High performance
- Low power consumption
- Real-time processing

As chips improve, the gap between cloud and device-based AI continues to shrink.

Energy Efficiency and Battery Life

Cloud AI doesn’t just cost money—it consumes enormous amounts of energy.

Why On-Device AI Is More Efficient

- Less data transmission
- Reduced server usage
- Optimized hardware acceleration

For mobile devices, this can translate into better battery life: dedicated AI hardware completes tasks quickly and efficiently, and the device spends less energy transmitting data over the network.

Offline AI Is a Huge Advantage

Cloud AI fails when connectivity does.

On-device AI enables:

- Offline translation
- Local voice assistants
- Camera processing without internet
- Smart features while traveling

For travelers and for users in regions with unreliable connectivity, including many emerging markets, this is a major improvement.

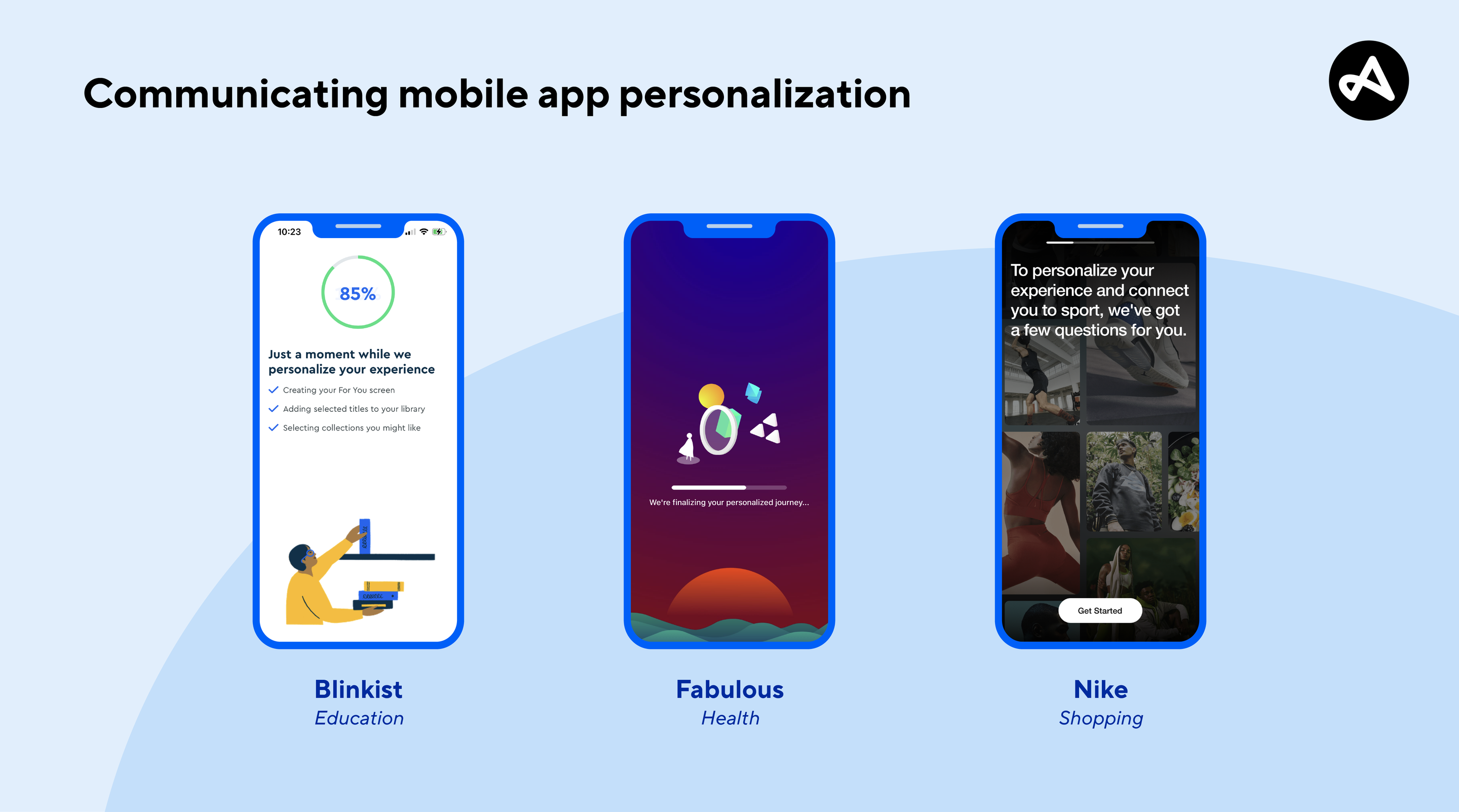

Personalization Works Better On the Device

AI becomes more useful when it understands the user deeply—but personalization often requires sensitive data.

Why On-Device AI Wins

- Learns user habits privately
- Adapts to individual behavior
- Doesn’t require data sharing
- Feels more personal and contextual

This allows AI to be smarter without being invasive.
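A toy illustration of private, on-device personalization: the hypothetical `LocalHabitModel` below learns which app a user tends to open at each hour of the day and suggests it. Its only state is an in-memory histogram and it makes no network calls, so the usage history never leaves the device. Real systems use far richer models; this sketch only shows where the data lives.

```python
from __future__ import annotations

from collections import Counter, defaultdict


class LocalHabitModel:
    """Hypothetical on-device habit model: app-usage counts per hour of day.

    All state is held in this object in local memory; nothing is uploaded.
    """

    def __init__(self) -> None:
        # hour of day (0-23) -> Counter of app names opened during that hour
        self._counts: defaultdict[int, Counter[str]] = defaultdict(Counter)

    def record(self, hour: int, app: str) -> None:
        """Log that `app` was opened during `hour`."""
        if not 0 <= hour <= 23:
            raise ValueError("hour must be in 0..23")
        self._counts[hour][app] += 1

    def suggest(self, hour: int) -> str | None:
        """Return the app opened most often during `hour`, or None without history."""
        counts = self._counts.get(hour)  # .get() does not create an empty entry
        if not counts:
            return None
        return counts.most_common(1)[0][0]


if __name__ == "__main__":
    model = LocalHabitModel()
    for app in ["news", "news", "mail"]:
        model.record(8, app)
    print(model.suggest(8))   # news
    print(model.suggest(22))  # None
```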

Regulation Is Pushing AI to the Edge

Governments worldwide are tightening rules around:

- Data collection
- AI transparency
- User consent
- Cross-border data transfers

On-device AI helps companies:

- Reduce regulatory risk
- Simplify compliance
- Avoid data localization issues

In many cases, keeping data on the device is simply easier than managing global cloud infrastructure under strict laws.

The Cloud Isn’t Going Away—It’s Changing Roles

Despite the shift, cloud AI still plays a crucial role.

What the Cloud Will Handle

- Training large AI models
- Heavy computational tasks
- Cross-device synchronization
- Large-scale analytics

What Devices Will Handle

- Real-time inference
- Personal data processing
- Context-aware intelligence
- Everyday AI features

The future is hybrid AI, not cloud-only.
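One way to picture the hybrid split is as a routing decision an app makes for each request. The policy below is a hypothetical sketch, not any company's actual logic: it prefers the device whenever the required model fits in local memory, keeps requests containing personal data on the device, and sends only large, non-personal workloads to the cloud when a connection exists. The 4 GB limit and all names are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import Enum


class Target(Enum):
    DEVICE = "device"
    CLOUD = "cloud"


@dataclass
class AIRequest:
    """Hypothetical description of one AI task."""
    contains_personal_data: bool  # e.g. photos, voice recordings, messages
    model_size_gb: float          # memory the required model needs


def choose_target(req: AIRequest, online: bool, device_limit_gb: float = 4.0) -> Target:
    """Hypothetical hybrid routing policy: device first, cloud for heavy work."""
    # Prefer local inference whenever the model fits on the device.
    if req.model_size_gb <= device_limit_gb:
        return Target.DEVICE
    # Offline, or data that should not leave the device: stay local, which in
    # practice means falling back to a smaller on-device model.
    if not online or req.contains_personal_data:
        return Target.DEVICE
    # Large, non-personal workload with connectivity available: use the cloud.
    return Target.CLOUD


if __name__ == "__main__":
    print(choose_target(AIRequest(False, 70.0), online=True).value)   # cloud
    print(choose_target(AIRequest(True, 70.0), online=True).value)    # device
```

Ordering the checks this way makes the device the default and the cloud the exception, mirroring the division of labor described above.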

Why Big Tech Is Moving Now

Several trends are converging at once:

- Hardware is powerful enough
- AI models are becoming smaller and more efficient
- Privacy expectations are higher
- Cloud costs are increasing
- Competition demands differentiation

On-device AI solves multiple problems at once.

What This Means for Consumers

For users, this shift results in:

- Faster AI features
- Better privacy
- More reliable experiences
- Less dependence on internet connectivity
- Smarter devices that feel more personal

Most users may never notice the technical change—but they’ll feel the benefits.

What This Means for Developers

Developers must adapt to:

- Optimizing AI models for local hardware
- Balancing on-device and cloud workloads
- Designing privacy-first experiences
- Supporting multiple chip architectures

While more complex, this approach enables more responsive and trusted applications.
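Optimizing models for local hardware covers several techniques, and the article does not name specific ones. One widely used example is post-training quantization, sketched below in plain Python: each 32-bit float weight is mapped to an 8-bit integer plus one shared scale factor, cutting weight storage roughly fourfold at the cost of a small, bounded rounding error. This is a simplified symmetric, per-tensor scheme chosen for illustration; production toolchains use more elaborate variants.

```python
from __future__ import annotations


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    # Map the largest-magnitude weight to +/-127; avoid dividing by zero.
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round to the nearest integer step and clamp to the [-127, 127] range used here.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize_int8(q: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from int8 values and the shared scale."""
    return [v * scale for v in q]


if __name__ == "__main__":
    weights = [0.42, -1.27, 0.05, 0.9]
    q, scale = quantize_int8(weights)
    restored = dequantize_int8(q, scale)
    print(q)
    # Rounding error per weight is at most half a quantization step.
    print(max(abs(a - b) for a, b in zip(weights, restored)) <= scale / 2)
```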

Challenges of On-Device AI

On-device AI isn’t without limitations.

Current Challenges

- Limited processing power compared to cloud servers
- Model size constraints
- Hardware fragmentation
- Slower updates than cloud models

However, rapid hardware and software improvements are steadily addressing these issues.
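Model size is a hard constraint because a model's weights must fit in the device's memory alongside everything else running on it. A rough estimate is the parameter count multiplied by the bytes stored per parameter; the hypothetical helper below applies that formula. It counts weights only and ignores activations and runtime overhead, so real requirements are higher.

```python
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate storage for model weights only, in gigabytes (1 GB = 1e9 bytes)."""
    if precision not in BYTES_PER_PARAM:
        raise ValueError(f"unknown precision: {precision}")
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Example: a model with 7 billion parameters at different precisions.
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(7e9, precision):.1f} GB")
    # fp32: 28.0 GB, fp16: 14.0 GB, int8: 7.0 GB, int4: 3.5 GB
```

The same model shrinks eightfold between 32-bit and 4-bit storage, which is why smaller, lower-precision models matter so much on phones with a fixed amount of memory.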

The Long-Term Vision: Invisible AI

The ultimate goal of on-device AI is invisible intelligence—AI that:

- Works quietly in the background
- Anticipates needs
- Enhances experiences without being intrusive

Instead of interacting with AI through prompts and commands, users will simply experience smarter devices.

Conclusion: Intelligence Is Moving Closer to You

Big tech’s shift toward on-device AI marks a fundamental change in how artificial intelligence is delivered. By bringing AI closer to users, companies can offer faster, more private, more reliable experiences—while reducing costs and regulatory risks.

The cloud remains essential, but the future belongs to hybrid AI systems that distribute intelligence across devices and servers.

In the coming years, the smartest AI won’t live in distant data centers—it will live quietly inside the devices you already use every day.
