Introduction: Digital Safety Now Depends on AI Understanding

Artificial intelligence no longer works only in the background. It actively shapes what we see, believe, buy, learn, and decide. From social media algorithms and recommendation systems to facial recognition, automated hiring, and generative AI, these technologies influence billions of people every day.

Yet despite AI’s growing power, many users do not understand how these systems work, where their data goes, or how AI can be misused. This gap between what AI can do and what the public understands about it has become one of the most serious digital safety risks of our time.

Building trustworthy AI systems and improving AI literacy are now essential, not only to protect individuals from scams, misinformation, and surveillance, but also to safeguard social stability, democracy, and human agency in a digital world.

In the AI era, ignorance is no longer neutral—it is dangerous.

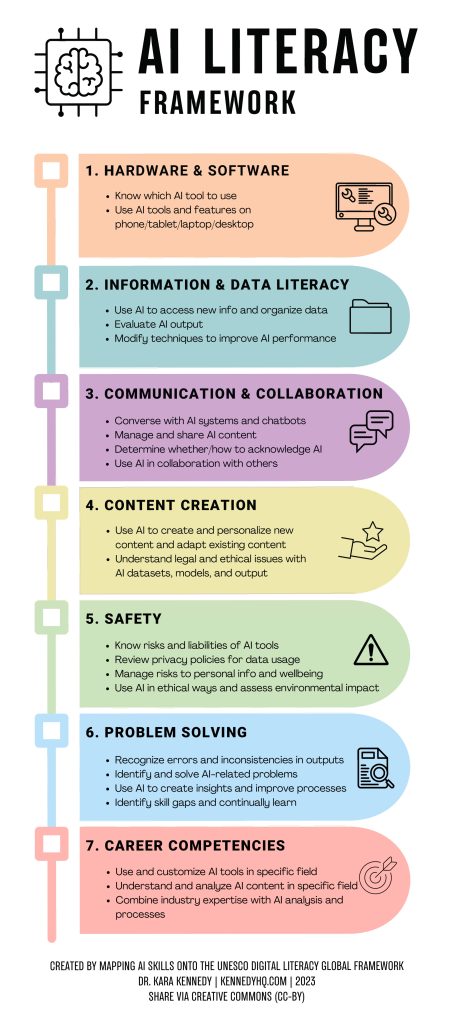

What AI Literacy Really Means (And Why It Matters)

AI literacy is not about learning to code. It is about understanding how AI affects daily life and decision-making.

AI literacy includes the ability to:

- Understand what AI can and cannot do
- Recognize bias, errors, and manipulation
- Identify AI-generated content
- Know how personal data is collected and used
- Question automated decisions

According to UNESCO, AI literacy is becoming a core civic skill, essential for informed participation in modern societies.

👉 https://www.unesco.org/en/artificial-intelligence

Without this understanding, people are more vulnerable to digital harm, exploitation, and misinformation.

Trust in AI: Why Blind Reliance Is a Serious Risk

Trust is essential for technology adoption—but blind trust in AI systems is one of the biggest threats to digital safety.

Trust Must Be Earned, Not Assumed

Many AI systems are perceived as objective or neutral. In reality, they are shaped by:

- Human-designed rules
- Historical data
- Economic and political incentives

As the World Economic Forum warns, uncritical reliance on AI can amplify inequality, bias, and social harm if systems are not transparent and accountable.

👉 https://www.weforum.org/topics/artificial-intelligence/

Healthy trust requires:

- Transparency in how systems work
- Clear accountability for outcomes
- Human oversight in high-risk decisions

Without these safeguards, AI becomes a source of hidden power rather than public benefit.

Why AI Literacy Is Essential for Digital Safety

1. Fighting Misinformation, Deepfakes, and Digital Manipulation

Generative AI can now produce realistic text, images, audio, and video at scale. This has dramatically increased the spread of:

- Deepfakes
- Scams and fraud
- Political misinformation
- Fake news

The OECD has identified AI-driven misinformation as a growing threat to public trust and democratic institutions.

👉 https://www.oecd.org/digital/artificial-intelligence/

AI-literate users are more likely to:

- Verify sources
- Question viral content
- Avoid spreading false information

Digital safety depends on informed skepticism, not passive consumption.

2. Reducing Bias and Harm in Automated Decisions

AI systems are increasingly used in:

- Hiring and recruitment
- Credit scoring and lending
- Healthcare diagnostics
- Law enforcement and surveillance

Because AI learns from historical data, it can reproduce and amplify existing discrimination. Research highlighted by MIT Technology Review shows how biased datasets can lead to harmful outcomes at scale.

👉 https://www.technologyreview.com/artificial-intelligence/
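One simple way to make this kind of bias visible is to compare how often each group receives a favorable outcome. The Python sketch below is a minimal illustration under stated assumptions: the decision data is invented, `selection_rates` and `disparate_impact_ratio` are hypothetical helper names, and the 0.8 cut-off borrows the "four-fifths" rule of thumb used in US employment-selection guidelines. A low ratio is a signal to investigate, not proof of discrimination on its own.

```python
from collections import Counter

def selection_rates(decisions):
    """Return the share of favorable outcomes per group.

    decisions: iterable of (group, approved) pairs, where approved is a bool.
    """
    totals = Counter()
    approved = Counter()
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {g: approved[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Lowest group rate divided by highest group rate (1.0 means equal rates)."""
    highest = max(rates.values())
    # If no group receives any favorable outcome, the rates are trivially equal.
    return min(rates.values()) / highest if highest > 0 else 1.0

# Hypothetical hiring-screen outcomes, invented for illustration only.
decisions = (
    [("group_a", True)] * 60 + [("group_a", False)] * 40
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)
print(rates)            # {'group_a': 0.6, 'group_b': 0.3}
print(round(ratio, 2))  # 0.5
# 0.8 is an assumed threshold based on the four-fifths rule of thumb.
if ratio < 0.8:
    print("Flag for human review: selection rates differ sharply between groups")
```

Comparing rates rather than raw counts keeps the check fair when groups of different sizes apply.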

AI literacy empowers people to:

-

Challenge unfair decisions

-

Demand transparency

-

Advocate for ethical safeguards

Trustworthy AI requires informed users who understand that algorithms are not infallible.

3. Protecting Privacy and Personal Data

Many AI systems depend on massive data collection. Without AI literacy, users often:

- Share sensitive information unknowingly
- Accept invasive tracking
- Lose control over digital identities

The Electronic Frontier Foundation (EFF) emphasizes that understanding data practices is critical to protecting digital rights.

👉 https://www.eff.org/issues/artificial-intelligence

Digital safety begins with awareness of how personal data fuels AI systems.

The Social Cost of Low AI Literacy

Low AI literacy doesn’t just harm individuals—it weakens societies.

Consequences include:

- Erosion of trust in institutions
- Over-reliance on automated authority
- Reduced critical thinking
- Increased manipulation and polarization

When people cannot distinguish human judgment from algorithmic output, power quietly shifts away from the public.

Building Trustworthy AI Systems: What Must Change

Trust in AI cannot be built through branding or promises. It must be engineered.

Transparency and Explainability

People trust systems they can understand. Explainable AI helps users see:

- Why decisions were made
- What data was used
- Where uncertainty exists

The European Commission identifies transparency as a cornerstone of trustworthy AI governance.

👉 https://digital-strategy.ec.europa.eu/en/policies/european-approach-artificial-intelligence
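For a simple linear scoring model, an explanation can be exact: each input's contribution is its weight multiplied by its value, so a user can see which factors pushed a decision one way or the other. The Python sketch below assumes a hypothetical credit model; the feature names, weights, bias, and threshold are invented for illustration. It covers "why" and "what data", not uncertainty, and systems built on complex models usually need approximation techniques such as SHAP or LIME instead.

```python
# Hypothetical linear credit-scoring model; names, weights, and threshold are invented.
WEIGHTS = {"income_k": 0.04, "missed_payments": -0.9, "years_at_address": 0.1}
BIAS = -1.0
THRESHOLD = 0.0

def explain(applicant):
    """Return the decision, the raw score, and each feature's contribution to it."""
    # In a linear model, each feature's contribution is simply weight * value.
    contributions = {name: WEIGHTS[name] * applicant[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    decision = "approve" if score >= THRESHOLD else "decline"
    # List the factors that moved the score most (in either direction) first.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return decision, score, ranked

decision, score, ranked = explain({"income_k": 50, "missed_payments": 3, "years_at_address": 2})
print(decision, round(score, 2))  # decline -1.5
for name, value in ranked:
    print(f"{name}: {value:+.2f}")
# missed_payments: -2.70
# income_k: +2.00
# years_at_address: +0.20
```

An applicant shown this breakdown can see that missed payments, not income, drove the decline, and can check whether that record is accurate.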

Human Oversight and Accountability

AI must support human judgment—not replace responsibility.

Trustworthy systems ensure:

- Humans remain accountable
- Errors can be corrected
- Harm can be addressed

Digital safety depends on keeping humans firmly “in the loop.”
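One common way to keep humans in the loop is to let a system finalize only routine, high-confidence cases, send everything else to a person, and log every routing choice so errors can be traced and corrected later. The Python sketch below is a minimal illustration under assumptions: the `Decision` fields, the 0.9 confidence threshold, and the `human_review` label are hypothetical. Model confidence scores are not always well calibrated, so the threshold itself deserves human scrutiny.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    # Hypothetical record of one automated recommendation.
    case_id: str
    outcome: str        # what the model recommends, e.g. "approve"
    confidence: float   # model's self-reported confidence, 0.0 to 1.0
    high_risk: bool     # True if the outcome affects health, liberty, or livelihood

# Every routing choice is recorded so outcomes can be audited and corrected later.
audit_log = []

def route(decision, min_confidence=0.9):
    """Send high-risk or low-confidence cases to a human; 0.9 is an assumed threshold."""
    if decision.high_risk or decision.confidence < min_confidence:
        destination = "human_review"
    else:
        destination = "automatic"
    audit_log.append((decision.case_id, decision.outcome, decision.confidence, destination))
    return destination

cases = [
    Decision("A1", "approve", 0.97, high_risk=False),
    Decision("A2", "decline", 0.62, high_risk=False),
    Decision("A3", "decline", 0.99, high_risk=True),
]
for case in cases:
    print(case.case_id, route(case))
# A1 automatic
# A2 human_review
# A3 human_review
```

Note that case A3 goes to a person despite 99% confidence: in high-risk decisions, accountability matters more than the model's certainty.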

Education: The Foundation of AI Literacy

Education systems play a decisive role in shaping AI-ready societies.

Teaching AI as a Civic Skill

AI literacy should be taught alongside:

- Media literacy
- Digital citizenship
- Ethics and critical thinking

The World Bank highlights education as essential for ensuring AI benefits are shared equitably.

👉 https://www.worldbank.org/en/topic/education

Lifelong Learning in the AI Age

Because AI evolves rapidly, literacy must be continuous. Workers, educators, policymakers, and citizens all need ongoing opportunities to update their understanding.

Shared Responsibility: Governments, Institutions, and Individuals

Building trust and literacy in AI requires collective action.

Governments and Institutions Must:

- Regulate high-risk AI systems
- Protect digital rights
- Support public education
- Enforce accountability standards

Individuals Must:

- Question AI outputs
- Verify information
- Learn how AI tools work
- Use technology responsibly

Digital safety is strongest when informed citizens meet ethical governance.

Conclusion: In the AI Era, Trust Comes from Understanding

Artificial intelligence will continue to reshape the digital world. The real question is whether people will understand the systems shaping their lives—or remain vulnerable to them.

Building trust and AI literacy is essential for digital safety because:

- Understanding reduces manipulation
- Literacy strengthens autonomy
- Trust protects societies

The future of digital safety will not be secured by smarter machines alone—but by more informed, empowered humans.
